Why Cities: Skylines 2 performs poorly

The teeth are not the only problem

44 min read

Table of contents

- (This is not) a performance review

- Pulling back the curtain

- Renderdoc analysis

- DOTS instance data update

- Simulation

- Virtual texturing cache update

- Skybox generation

- Pre-pass

- The teeth controversy

- Pre-pass continued, featuring the high poly hall of shame

- Motion vectors

- Roads and decals

- Main pass

- Ambient occlusion

- Cascaded shadow mapping

- Screen space reflections and global illumination

- Deferred lighting

- Weird clothing pass

- Sky rendering

- Transparent objects pre-pass

- Water rendering

- Particles, rain and transparent objects

- VT feedback processing

- Bunch of post-processing

- Outlines, text and other UI

- Summary and conclusions

- Added on 2023-11-06: FAQ and other notes

- You mentioned you got 7 FPS in the main menu. Why was it so slow?

- How many total vertices and triangles are there in the scene?

- Do you think the game should have been made with Unreal Engine 5 or some other engine instead?

- Renderdoc is unreliable for benchmarking, you should have used [insert other tool here] instead.

- something something can’t believe this game has no LODs anywhere

- You mentioned the game includes InstaLOD. What is its role in the game?

- I hate JavaScript. Hating JavaScript and / or the modern web is one of my most defining personality traits. The game uses JavaScript for the UI, which is probably why it’s so slow and ugly.

- How many questions can you come up with? Don’t you have anything better to do?

One of the most highly anticipated PC games of the year, Cities: Skylines 2, was released last week to a mixed reception. My impression is that gameplay and simulation-wise it seems to be a step in the right direction, and at least on paper the game seems more well-rounded in terms of features than the original was at launch. There are however significant issues with the game, ranging from balance problems and questionable design choices to bugs rendering a lot of the game’s economic simulation almost pointless. Whether or not the game is a worthy successor to the original is an open question at this point, but one thing is almost universally agreed upon: the title’s performance is not up to par.

(This is not) a performance review

There were warning signs. Less than a month before the release date it was announced that the game’s recommended system requirements had been raised and that the console release had been delayed to 2024. Quite a few YouTubers and streamers got early access to the game, but they were explicitly forbidden to talk about performance until the regular review embargo was lifted. This wasn’t exceptional as games tend to receive frequent performance optimizations and other fixes in the last few weeks before release, but it wasn’t a good sign either. Then, just a week before release Colossal Order issued a statement which I’d describe as a pre-emptive apology for the poor performance of the game. And then the game was released.

Simulation-heavy games like city builders can be surprisingly hard to run at a good framerate, but what makes Cities: Skylines 2 stand out is that on most systems and in most situations the game is GPU-bound. That is rather unusual for a game of this genre, as most games like this tend to be really heavy on the CPU (such as the original Cities: Skylines), but relatively light on graphics. Visually the game is an improvement in most aspects compared to the 2015 original, but nothing really justifies the game being harder to run than maxed out Cyberpunk 2077 with path tracing enabled. Personally I’d even argue that C:S2 looks quite unpleasant; while individual models are relatively detailed and the sense of scale is impressive, the shading is decidedly last-gen and the screen is absolutely covered in rendering artifacts and poorly filtered textures. Comparing the game’s graphics to those of a relatively close competitor, Anno 1800 (which was released in 2019), doesn’t do it any favours. Anno goes for a slightly more stylized look and in my humble opinion manages to look more polished and consistent, while offering decent performance even on hardware that was considered low-to-mid range in 2019.

I’m not going to waste my time meticulously benchmarking the game because many others have done that already and to a significantly higher standard than I could. If you are interested in that, check out this written article from PC Games Hardware (in German) or this video from Gamers Nexus (in Americanese). I’ll summarize the results as such: when the settings are turned above the bare minimum (the “very low” graphics preset completely disables decadent luxuries such as shadows and fog) you need a graphics card that costs about 1000 to 2000 euros to run the game at 60 frames per second at 1080p. As a comparison, similar hardware in Alan Wake 2 (which was released the same week as C:S2 and is considered by some to be the best looking game of this console generation) reaches comparable average framerates with all settings cranked including path tracing, either at 1440p without any upscaling magic or at 4K with some help from DLSS. I think that’s a good illustration of how bizarrely demanding C:S2 is.

Some personal experiences to cap off this introduction: when I started the game for the first time on my relatively beefy gaming PC (equipped with an NVidia RTX 3080 graphics card, an AMD Ryzen 7 5800X CPU and a 5120x1440 super ultrawide monitor) I was greeted by a frame rate of under 10 FPS in the main menu. After tweaking the settings as instructed by the developer (which involved disabling depth of field, motion blur and volumetric effects) my FPS reached almost 90. What made this especially bizarre was that the menu features just a static background image and a few buttons. Loading into an empty map gave me about 30 to 40 FPS, and the frame rate stayed around that level after playing for about an hour, with the occasional stutter. Let’s investigate.

Pulling back the curtain

Cities: Skylines 2 like its predecessor is made in Unity, which means the game can be decompiled and inspected quite easily using any .NET decompiler. I used JetBrains dotPeek which has a decent Visual Studio-like UI with a large variety of search and analysis options. However static analysis doesn’t really tell us anything concrete about the rendering performance of the game. To analyze what’s going on with rendering I used Renderdoc, an open source graphics debugger which has saved my bacon with some of my previous GPU-y personal projects.

Engine and architecture

Let’s go through some of the technical basics of the game. Cities: Skylines 2 uses Unity 2022.3.7, which is just a few months old at the time of writing. The most notable aspect of Unity 2022 was the stabilization of the DOTS set of technologies which Unity has been working on for several years, and interestingly C:S2 seems to be largely built on top of this stuff, including the newfangled Entity Component System (ECS) implementation and Burst compiler. I’ve been interested in the ECS architecture for a few years and have experimented with implementations such as Specs, Legion and most recently Bevy, and if it weren’t for the many issues in this game I’d rather be writing about how ECS is basically the ideal architecture for a game like this. Cities: Skylines 2 seems to use DOTS to great effect as the game makes use of multiple CPU cores much more efficiently than its predecessor. Unfortunately a lot of the graphics-related issues are indirectly caused by the game’s use of DOTS. I’ll expand on that later.

The game also utilizes a bunch of third party middleware and some custom / forked libraries. Unlike DOTS, Unity’s UI Toolkit is apparently still not ready for production as C:S2 uses HTML, CSS and JavaScript based Coherent Gameface (what a name!) for its user interfaces. A brief glance at the JS bundle reveals that they are using React and bundling using Webpack. While this is something that is guaranteed to make the average native development purist yell at clouds and complain that the darned kids should get off their lawn, I think at least on paper this will make the game’s UIs significantly easier to maintain and modify than before. Other notable bundled libraries include InstaLOD, Odin Serializer, and a DLL file for NVidia DLSS 3, even though the technology is not currently supported by the game.

For graphics rendering the game makes use of Direct3D 11 and Unity’s High Definition Rendering Pipeline, also known as HDRP. Unity’s regular rendering system only works with traditional MonoBehaviour-based game objects, so a game built using DOTS & ECS needs something to bridge the gap. Unity has a package called Entities Graphics, but surprisingly Cities: Skylines 2 doesn’t seem to use that. The reason might be its relative immaturity and its limited set of supported rendering features; according to the feature matrix both skinning (used for animated models like characters) and occlusion culling (not rendering things that are behind other things) are marked as experimental, and virtual texturing (making GPU texture handling more complex but hopefully more efficient) is not supported at all. Instead it seems that Colossal Order decided to implement the glue between the ECS and the renderer by themselves, utilizing BatchRendererGroup and a lot of relatively low level code. I’ll cover this and its many implications in more detail later.

Attachment issues

Getting Renderdoc attached to a process and collecting rendering events is usually quite trivial. Normally you just need to provide Renderdoc the path of the executable, the working directory and some command line arguments, and then Renderdoc starts the binary and injects itself to the game process. However, my issue was that I had the game on Xbox Game Pass, which does some weird sandboxing and / or NTFS ownership magic to limit what you can do with the game files. Renderdoc was not allowed to read the game’s executable, even when running as administrator. Before I knew Game Pass was the problem I also tried to use NVidia Nsight Graphics™️ instead (a tool similar to Renderdoc from NVidia), but it had the same issue. Ultimately I ended up solving that particular problem with my credit card: I bought the game again on Steam at full price, despite knowing it had severe issues. Sorry.

However, the Steam version didn’t immediately start co-operating either. This time the problem was Paradox Launcher, a little piece of bloatware used in most big budget Paradox-published titles. The launcher binary is also included in the Game Pass version, but at least on release it seemed to be completely unused. Basically when you start C:S2 from Steam it pops up Paradox Launcher, you click either Resume or Play, and then it actually runs the game binary. I tried to attach Renderdoc by running Cities2.exe directly but that didn’t work — it creates the game window, but then runs for a few seconds, opens the launcher and then exits. There is an option in Renderdoc called “Capture child processes” which should in theory make Renderdoc inject itself to all processes started by the target process — so it should attach itself to the launcher started by the game binary, and then get injected to the game binary again — but I think there was some extra layer of indirection which unfortunately prevented that from working. I configured Renderdoc to start Paradox Launcher directly, but in short that didn’t work either, as Steam and the launcher do some communication to select which game to start and to handle authentication / DRM thingies. Some of that communication happens through command line arguments which I was able to extract using Process Explorer, but reusing the same arguments didn’t work either, so I gave up on that approach as well.

Ultimately I was able to finally attach Renderdoc by using the Global Process Hook option, which the program hides by default and advises against using. It is a very invasive method of hooking as it injects a DLL into every single process that is started on the system, but hey, it worked! We can finally see what’s going on.

This place is a message... and part of a system of messages... pay attention to it! Sending this message was important to us. We considered ourselves to be a powerful culture. This place is not a place of honor... no highly esteemed deed is commemorated here... nothing valued is here.

I was later able to get NVidia Nsight Graphics™️ working as well. Instead of trying to start the game or the launcher, I opened Steam from Nsight and then started the game from Steam’s UI as usual. Ultimately I wasn’t able to get much more information out of Nsight than I already had from Renderdoc, as it seems that many of Nsight’s profiling and performance focused features are not supported with D3D11.

Renderdoc analysis

I’ll preface this section by admitting that I’m not a professional graphics programmer nor even a particularly proficient hobbyist. I do graphics programming occasionally and have spent quite a lot of time toying with game engines, but I’m not an expert on either subject. I have never implemented Actual Proper Graphics Things like deferred rendering or cascaded shadow mapping, though I think I know how they should work in theory. So as difficult as it might be to believe, there is a chance that I’m wrong about some of the things I’m about to say. If you think I’m wrong, please let me know!

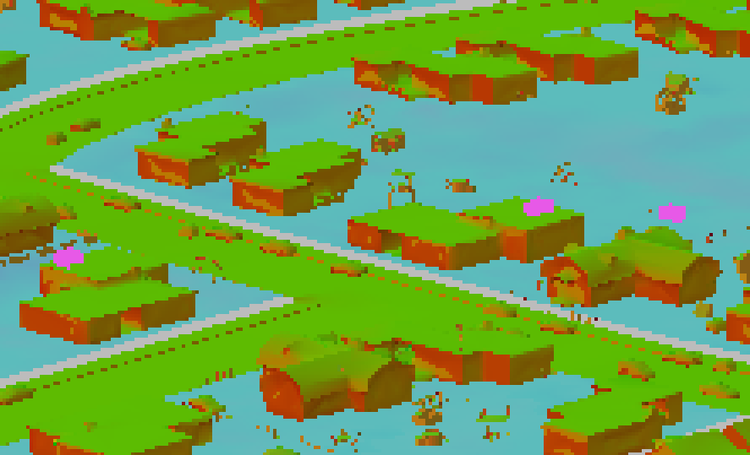

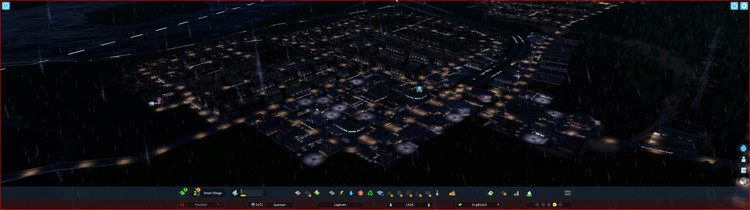

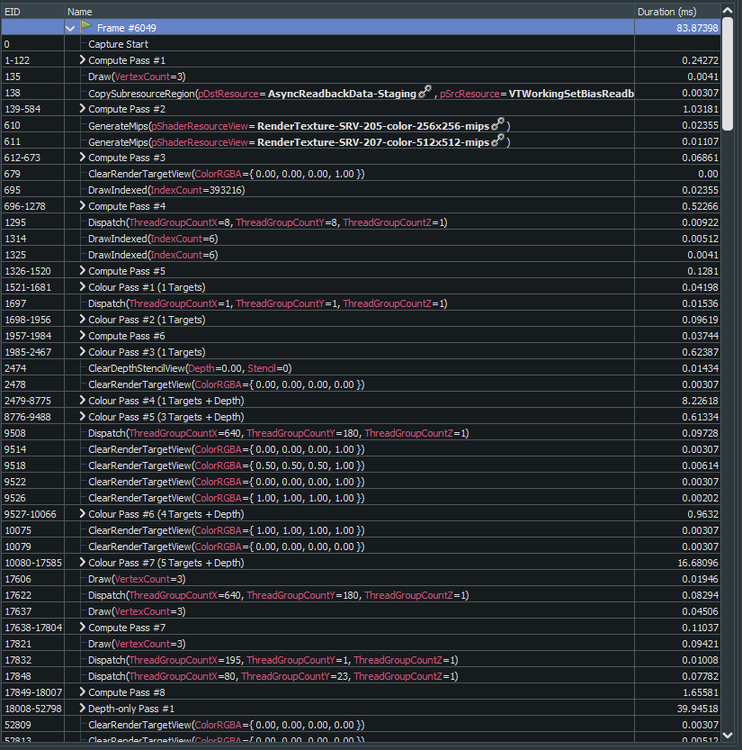

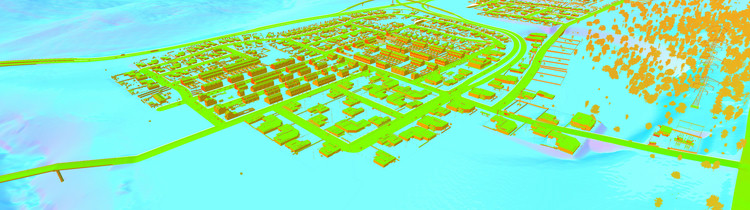

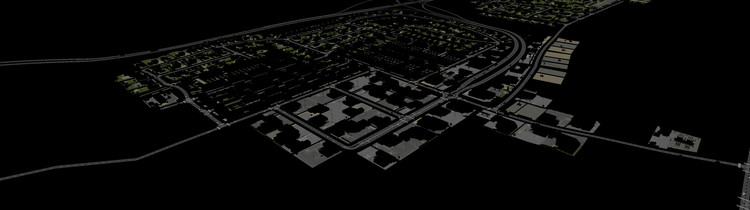

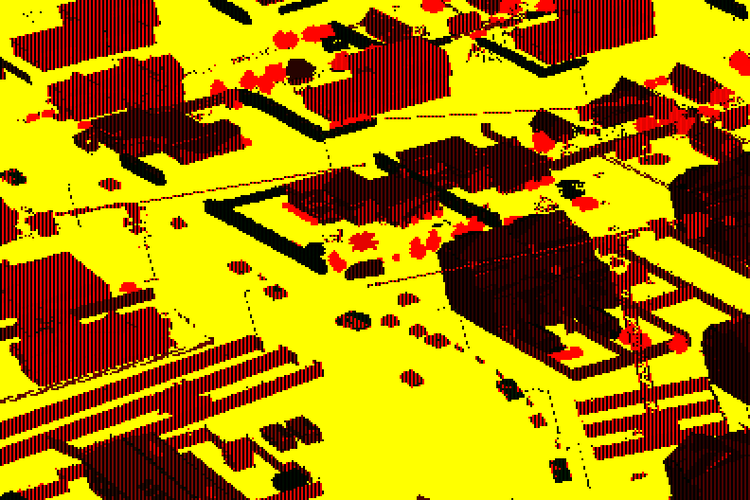

Let’s begin by analyzing the following frame (click to open it as a new tab):

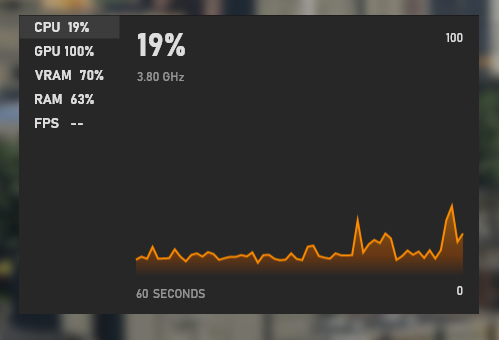

This is a decently complex frame, but it’s far from the scale the game can actually reach. This was captured in a town of about 1000 inhabitants under an hour into a new save. There’s rain and it’s night time, but in my experience neither moves the needle much in terms of performance. The game version was 1.0.11f1, so the first post-release hotfix is included. It should be noted that the latest patch at the time of publication (1.0.12f1) was released during the making of this article and it includes some improvements for the issues I’m about to describe, but it’s far from having solved all of them.

Renderdoc reports that the frame took about 87.8 milliseconds to render, which would average to about 11.4 FPS. The game was running at 30-40 FPS on average at the time, so either this frame is an outlier (which, as we’ve learned from the Gamers Nexus video, is ironically quite common) or perhaps more likely Renderdoc adds a bit of overhead in a way that affects the measurements, as all of the frames I’ve captured have reported slightly higher frame times than what I’ve seen in-game when playing normally. I’m making the assumption that even if Renderdoc does add some overhead, it doesn’t distort the measurements so badly that they become useless, for example by making specific API calls take 10x longer than they normally would.

For reference, at a consistent 60 FPS the frametime should always be about 1000 / 60 = 16.666... milliseconds.
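To make the budget arithmetic concrete, here is a small Python sketch that converts between frame rate and frame time and expresses the duration of a single pass as a share of a target budget. The helper names are mine and purely illustrative; nothing here comes from the game or from Renderdoc.

```python
def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def fps_from_frame_time(ms: float) -> float:
    """Average frame rate implied by a per-frame time in milliseconds."""
    return 1000.0 / ms


def budget_share(pass_ms: float, target_fps: float = 60.0) -> float:
    """Fraction of the per-frame budget consumed by a single pass."""
    return pass_ms / frame_time_ms(target_fps)


if __name__ == "__main__":
    print(f"60 FPS budget: {frame_time_ms(60):.2f} ms")
    print(f"87.8 ms frame: {fps_from_frame_time(87.8):.1f} FPS")
    print(f"1 ms pass at 60 FPS: {budget_share(1.0):.1%} of the budget")
```

The same helpers can be used to put the per-pass timings quoted later in the article into perspective against a 60 FPS target.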

Here are some basic rendering statistics reported by Renderdoc:

Draw calls: 6705

Dispatch calls: 191

API calls: 53361

Index/vertex bind calls: 8724

Constant bind calls: 25006

Sampler bind calls: 563

Resource bind calls: 13451

Shader set calls: 1252

Blend set calls: 330

Depth/stencil set calls: 301

Rasterization set calls: 576

Resource update calls: 1679

Output set calls: 739

API:Draw/Dispatch call ratio: 7.73796

342 Textures - 3926.25 MB (3924.10 MB over 32x32), 180 RTs - 2327.51 MB.

Avg. tex dimension: 1611.08x2212.36 (2133.47x2984.88 over 32x32)

4144 Buffers - 446.59 MB total 6.48 MB IBs 43.35 MB VBs.

6700.34 MB - Grand total GPU buffer + texture load.

There’s not much we can deduce from these figures alone. 6705 draw calls and over 50000 API calls both sound like a lot, but without further context their cost is hard to evaluate. 6.7 gigabytes of used video memory is a lot for a relatively simple scene like this, especially considering there are still current generation mid-tier graphics cards with only 8 gigabytes of VRAM.
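As a quick sanity check on how these figures relate to each other, the sketch below recomputes two of them from the others. The assumptions are mine, not something taken from Renderdoc's documentation: that the ratio divides API calls by draw plus dispatch calls, and that the grand total is textures plus render targets plus buffers. Both assumptions reproduce the reported values to within rounding.

```python
# Figures copied from the Renderdoc statistics listed above.
draw_calls = 6705
dispatch_calls = 191
api_calls = 53361

textures_mb = 3926.25
render_targets_mb = 2327.51
buffers_mb = 446.59

# Assumed definition: API calls per draw or dispatch call.
api_ratio = api_calls / (draw_calls + dispatch_calls)

# Assumed definition: sum of all three GPU resource categories.
total_mb = textures_mb + render_targets_mb + buffers_mb

if __name__ == "__main__":
    print(f"API calls per draw/dispatch: {api_ratio:.5f}")
    print(f"GPU resources in total: {total_mb:.2f} MB")
```

In other words, roughly 58% of the reported memory is regular textures and about 35% is render targets, with buffers making up the small remainder.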

Since the game uses HDRP its documentation might serve as a good starting point for understanding the different rendering and compute passes the game performs on each frame. I’m not going to do a fancy graphics study like these legendary ones for DOOM 2016 and GTA V, but I’ll go through most of the rendering process step by step and highlight some of the more interesting things along the way.

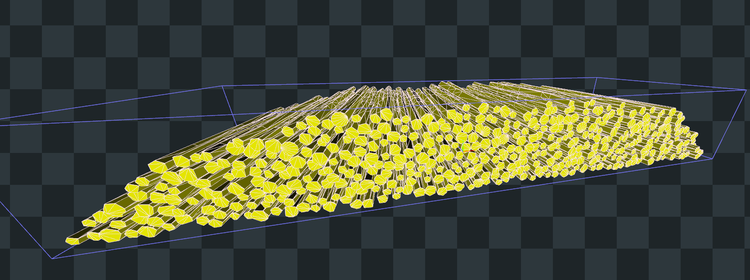

DOTS instance data update

Almost every draw call the game makes uses instancing, which is required in a game of this scale. To make instancing work the game has a single large buffer of instance data which contains everything necessary for rendering any and all objects. The contents and size of per-instance data varies by the type of entity but it seems that regular game objects like buildings take about 50 floats per instance, roads significantly more. I haven’t fully figured out how the buffer is managed because it’s a very complex system, but essentially the instance data for every visible object is updated to the buffer each frame, and the changes are then uploaded to the GPU. The buffer starts at about 60 megabytes, and is reallocated to a larger size when necessary.

The buffer is used for practically every draw call the game makes, and according to Renderdoc it’s at least available in every vertex and pixel shader, though I would assume it’s primarily only used in vertex shaders. It would be interesting to know how this buffer affects the GPU’s cache, as I would assume instances are not laid out in the buffer in the same order they are rendered and that could be a problem for caching, but I lack the expertise to figure that out. Regardless there is a certain cost associated with looking up data from this buffer for every vertex, and it might explain some of the issues regarding high poly meshes I’ll get to soon.
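To get a feel for the scale of this buffer, here is a rough back-of-the-envelope sketch. It assumes 4-byte floats, takes "60 megabytes" to mean 60 million bytes, and uses a doubling growth policy; the doubling is purely a hypothetical stand-in, since all I know is that the buffer is reallocated to a larger size when necessary.

```python
FLOAT_BYTES = 4  # assumption: 32-bit floats


def instance_bytes(floats_per_instance: int = 50) -> int:
    """Size of one instance record, using the roughly 50 floats seen for buildings."""
    return floats_per_instance * FLOAT_BYTES


def capacity(buffer_bytes: int, floats_per_instance: int = 50) -> int:
    """How many instance records of the given size fit into the buffer."""
    return buffer_bytes // instance_bytes(floats_per_instance)


def grown_size(current_bytes: int, needed_bytes: int) -> int:
    """Hypothetical growth policy: keep doubling until the data fits."""
    size = current_bytes
    while size < needed_bytes:
        size *= 2
    return size


if __name__ == "__main__":
    initial = 60 * 1000 * 1000
    print(f"One building-like instance: {instance_bytes()} bytes")
    print(f"Instances that fit initially: {capacity(initial)}")
    print(f"Size after needing 70 MB: {grown_size(initial, 70_000_000)} bytes")
```

Under these assumptions the initial allocation has room for a few hundred thousand building-sized records, fewer if a large share of them are roads.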

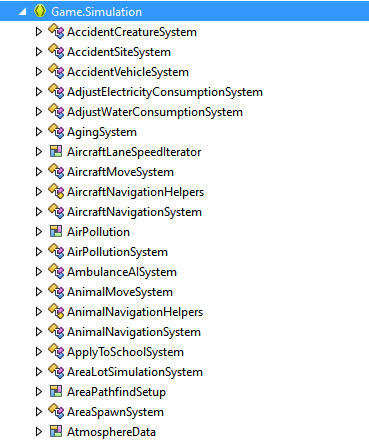

Simulation

Several compute shaders are used for graphics-related simulations, such as water, snow and particles, as well as skeletal animation. These take about 1.5 milliseconds in total, which is under 2% of frame time.

One early theory regarding the game’s poor performance was that maybe it was offloading a lot of the actual game simulation to the GPU, saving CPU time but taking processing power away from rendering. However, I can conclude based on both decompiled code and GPU calls that this is simply not the case.

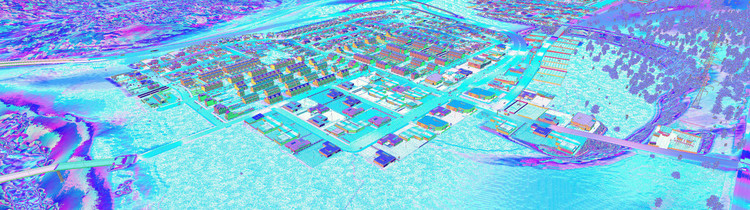

Virtual texturing cache update

Remember how I mentioned that virtual texturing is not supported by Entities Graphics? Well, it seems that C:S2 implements its own virtual texturing / texture streaming system. I first assumed that the game is using Unity’s built-in solution for that, but in traditional Unity fashion even though it was added to the engine in 2020 following an acquisition it remains as experimental and unsupported as ever (if not more so).

What is virtual texturing, anyway? My understanding is that virtual texturing is an approach for loading and managing texture data in a potentially more memory efficient way than the méthode traditionnelle of using one GPU texture per texture asset. Textures are stored in texture atlases, which are basically fancier versions of sprite sheets (which I also happened to cover in my GPU tile mapping article). Atlases consist of tiles of a fixed size, and each tile can contain one or more textures. The trick which can save memory is that large textures can be split into multiple tiles, so if you have a large texture that is only visible in a small portion of the screen, you only need to load the tiles that are actually visible. Virtual texture visibility information is produced as a side product of normal rendering in a later pass, and the visibility information is used on the CPU side to determine which tiles need to be loaded and which can be unloaded. If you want to know more, Unreal Engine’s documentation seems to offer a great description of the technique in more detail. The game seems to use virtual texturing for all static 3D objects except the terrain.
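The tile selection idea can be sketched in a few lines of Python. This is a simplified illustration and not the game's implementation: it assumes a square tile size of 128 pixels (a number I picked for the example), ignores mip levels entirely, and takes the visible part of a texture as a UV rectangle rather than deriving it from a feedback pass.

```python
import math
from typing import Set, Tuple


def visible_tiles(
    tex_w: int,
    tex_h: int,
    tile_size: int,
    uv_min: Tuple[float, float],
    uv_max: Tuple[float, float],
) -> Set[Tuple[int, int]]:
    """Return the (x, y) indices of tiles touched by a visible UV rectangle."""
    tiles_x = math.ceil(tex_w / tile_size)
    tiles_y = math.ceil(tex_h / tile_size)

    def tile_range(lo: float, hi: float, size_px: int, tile_count: int) -> range:
        first_px = int(lo * size_px)
        last_px = max(math.ceil(hi * size_px) - 1, first_px)
        first = min(first_px // tile_size, tile_count - 1)
        last = min(last_px // tile_size, tile_count - 1)
        return range(first, last + 1)

    xs = tile_range(uv_min[0], uv_max[0], tex_w, tiles_x)
    ys = tile_range(uv_min[1], uv_max[1], tex_h, tiles_y)
    return {(x, y) for y in ys for x in xs}


if __name__ == "__main__":
    everything = visible_tiles(4096, 4096, 128, (0.0, 0.0), (1.0, 1.0))
    corner = visible_tiles(4096, 4096, 128, (0.0, 0.0), (0.25, 0.25))
    print(f"Tiles in the whole texture: {len(everything)}")
    print(f"Tiles needed for one corner: {len(corner)}")
```

With these example numbers only a sixteenth of the tiles need to be resident when a quarter of the texture is visible along each axis, which is where the potential memory savings come from.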

This approach to texturing is quite elegant in theory but it comes with many tradeoffs, and the game’s implementation still has some teething issues, such as high resolution textures sometimes failing to load even when the surface is close to the camera. The use of virtual texturing is also likely the culprit for the game’s lack of support for anisotropic texture filtering, a standard feature in PC games since the beginning of the millennium.

The pass took about 0.5 milliseconds.

Skybox generation

The game uses Unity HDRP’s built-in sky system, so it generates a skybox texture (a cubemap) every frame. This takes about 0.65 milliseconds which is not a lot compared to everything else, but if the game was targeting 60 FPS it would be almost 4% of the total frame time budget.

Pre-pass

Now we get to the actual rendering. C:S2 uses deferred rendering, which basically means that rendering is done in many phases and using several different intermediate render targets. The first phase is the pre-pass, which produces per-pixel depth, normal and (presumably) smoothness information into two separate textures.

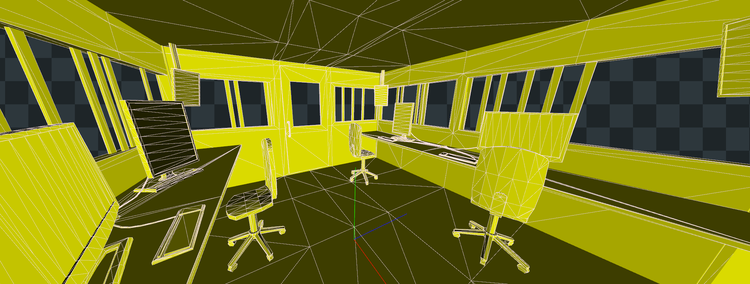

This pass is surprisingly heavy as it takes about 8.2 milliseconds, or roughly about far too long, and this is where some of the biggest issues with the game’s rendering start to appear. But first we need to talk about THE TEETH.

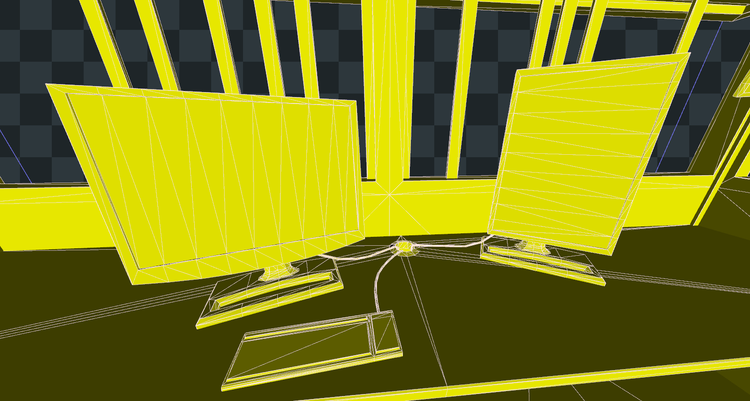

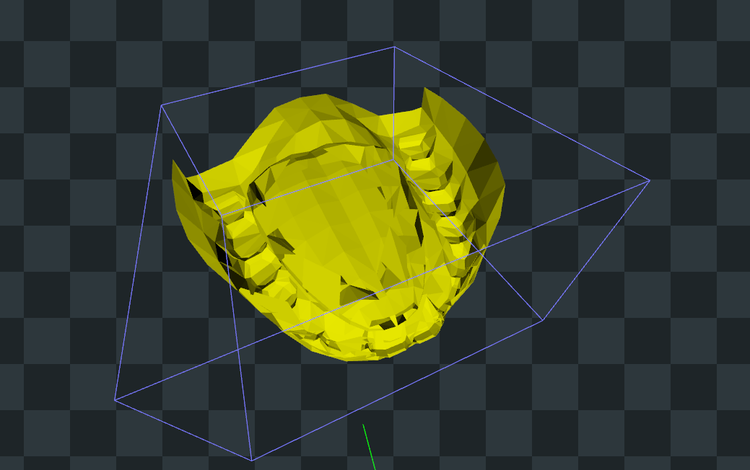

The teeth controversy

One bizarre yet popular talking point about Cities: Skylines 2’s performance is the fact that the character models have fully modelled teeth, even though there’s literally no way to see them in-game, unless we count using the photo mode and clipping the camera inside a character’s head. Reddit user Hexcoder0 did some digging using NVidia Nsight Graphics™️ and posted their findings to a thread in the official subreddit (which inspired me to do my own research and write this pointlessly long article). It was revealed that not only does the game have fully modelled teeth, they are rendered literally all the time at maximum quality. More importantly this is the case for everything related to characters: none of the character meshes have any LOD variants. Colossal Order was quick to acknowledge this publicly, and they even referenced broader problems with LOD handling. Ignore all the weird rambling about simulating citizens’ teeth and whatnot; this is not Dwarf Fortress so they are not doing that, and even if they were that obviously wouldn’t require rendering the teeth.

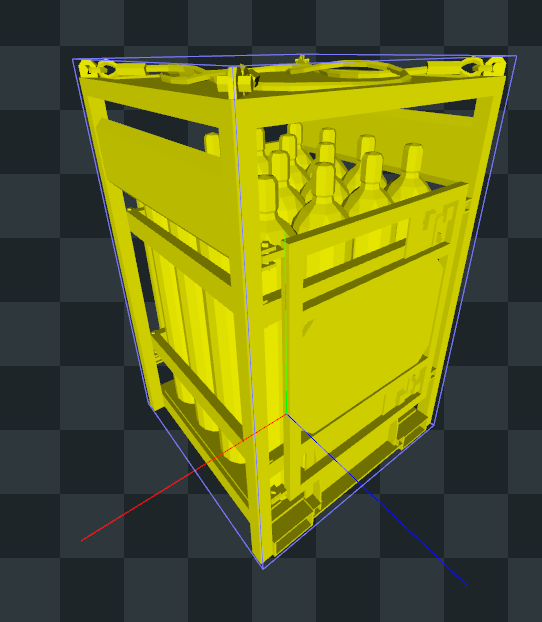

Colossal Order has also told us that they are using a middleware called Didimo Popul8 to generate the character models. If I recall correctly the teeth controversy began even before the game was released when someone noticed that the Didimo character specification includes separate meshes for things like teeth and eyelashes. I had originally assumed that the game is using Didimo’s default character meshes (because to be honest they look very generic and soulless) but now I’m not so sure. The meshes in the game in fact have even more polygons than Didimo’s defaults: the infamous mouth / teeth model for example consists of 6108 vertices, significantly more than the default mesh’s 1060. A single character even before we add hair, clothing and accessories is about 56 thousand vertices, which is a lot. For context the average low-density residential building uses less than 10 thousand vertices before yard props and other details are added.

In this example frame the game renders 13 sets of teeth, and their visual impact on the frame is zero: not a single pixel is affected. Even the characters themselves contribute basically nothing to the frame except for noise and artifacts.

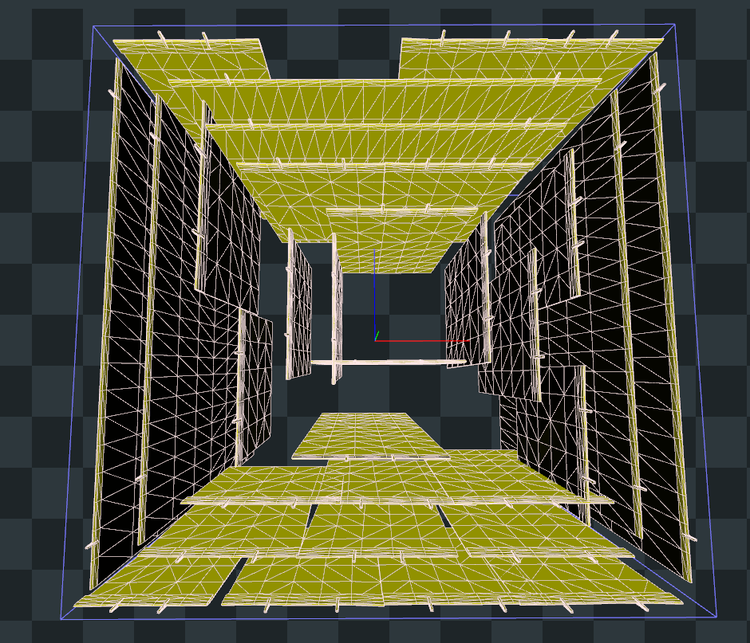

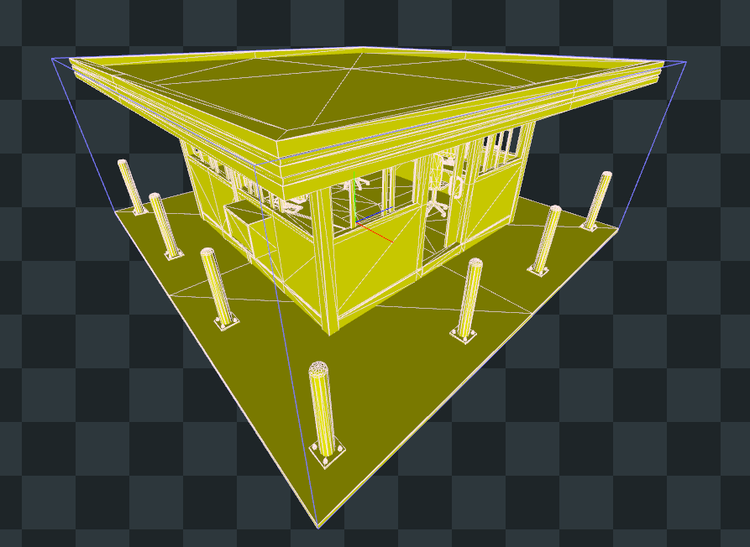

Pre-pass continued, featuring the high poly hall of shame

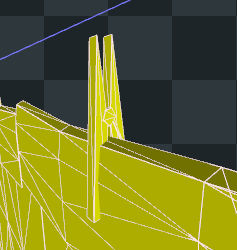

The egregiously unoptimized character models are not the sole cause of the game’s poor performance (because it’s never that easy), but they are an indicator of the broader issues with the game’s assets and rendering. The game regularly draws too many objects with too many polygons that have quite literally zero impact on the final image. This is not specific to the pre-pass, as the same issues seem to affect all rendering passes which rasterize geometry. I think there are two main causes for this:

- Some models don’t have any LOD variants at all.

- The game’s culling system is not very advanced; the custom rendering code only implements frustum culling and there’s no sign of occlusion culling at all. There is some culling based on distance but it’s not very aggressive, which is great for avoiding pop-in but bad for performance.

Here are a few other examples besides the character models.

Now you might say that these are just cherry-picked examples, and that modern hardware handles models like these just fine. And you would be broadly correct in that, but the problem is that all of these relatively small costs start to add up, especially in a city builder where one unoptimized model might get rendered a few hundred times in a single frame. Rasterizing tens of thousands of polygons per instance per frame and literally not affecting a single pixel is just wasteful, whether or not the hardware can handle it. The issues are luckily quite easy to fix, both by creating more LOD variants and by improving the culling system. It will take some time though, and it remains to be seen if CO and Paradox want to invest that time, especially if it involves going through most of the game’s assets and fixing them one by one.
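To illustrate what LOD variants and size-based culling buy you, here is a minimal Python sketch. Everything in it is hypothetical except the 56 thousand vertex figure for a full-detail character: the LOD distances, the lower vertex counts, the 60 degree field of view and the 3 centimetre radius for a set of teeth are numbers I made up for the example, and the game's actual culling code works differently.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Lod:
    max_distance_m: float  # use this variant up to this camera distance
    vertex_count: int


def select_lod(lods: Sequence[Lod], distance_m: float) -> Optional[Lod]:
    """Pick the nearest variant whose range covers the distance; None means culled."""
    for lod in sorted(lods, key=lambda item: item.max_distance_m):
        if distance_m <= lod.max_distance_m:
            return lod
    return None


def projected_height_px(
    radius_m: float, distance_m: float, fov_y_deg: float, screen_height_px: int
) -> float:
    """Approximate on-screen height of a bounding sphere under perspective projection."""
    visible_height_m = 2.0 * distance_m * math.tan(math.radians(fov_y_deg) / 2.0)
    return (2.0 * radius_m / visible_height_m) * screen_height_px


# Hypothetical LOD chain; only the 56,000 vertex figure comes from the article.
CHARACTER_LODS = [Lod(25.0, 56_000), Lod(100.0, 5_000), Lod(400.0, 500)]

if __name__ == "__main__":
    for distance in (10.0, 150.0, 1000.0):
        lod = select_lod(CHARACTER_LODS, distance)
        label = "culled" if lod is None else f"{lod.vertex_count} vertices"
        print(f"Character at {distance:.0f} m: {label}")
    teeth_px = projected_height_px(0.03, 100.0, 60.0, 1440)
    print(f"Teeth at 100 m cover about {teeth_px:.2f} px in height")
```

A renderer could skip anything whose projected height falls below a pixel or two, which in this example would drop the teeth long before the character itself becomes invisible.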

To be clear having highly detailed models is not a problem in itself, especially if you are intending to make a self-proclaimed next generation city builder. The problem is that the game is struggling to handle this level of detail, and that polygons are used inefficiently and inconsistently. For every character model with opulently modelled nostril hairs there are common props with surprisingly low polycounts. I think if the game ran well people would be celebrating these highly detailed models and making hyperbolic social media posts and clickbait videos titled “OMG the devs thought of EVERYTHING 🤯🤯🤯” and “I can’t believe they modelled the cables in the parking booth 😱😱😱” and “CITY SKYLINES 2 MOST DETAILED GAME EVER CONFIRMED?”. Instead we are here.

Oh yeah I was talking about rendering at some point, wasn’t I? Let’s continue.

Motion vectors

The game renders per-pixel motion vectors as a separate pass, which can be used for anti-aliasing and motion blur. I think motion vectors are slightly broken now, which is also the reason the game doesn’t support DLSS or FSR2 at the time of writing. There is a temporal anti-aliasing option hidden in the advanced settings menu and it improves the rendering quality to some extent, but things animated using vertex shaders like trees are just covered in artifacts and ghosting.

This pass takes about 0.6 milliseconds.

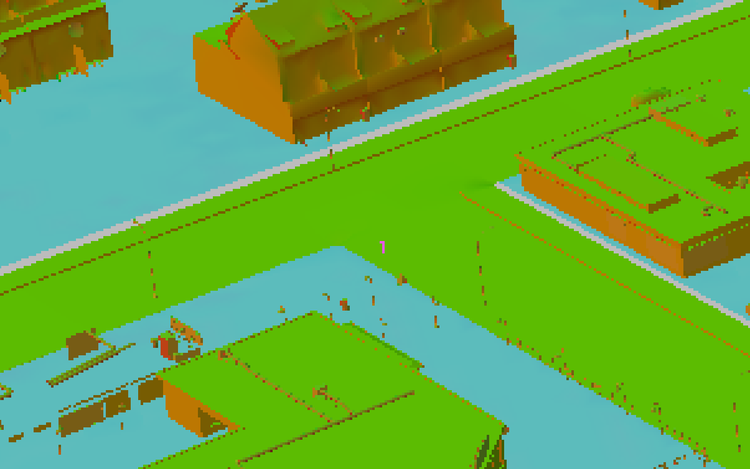

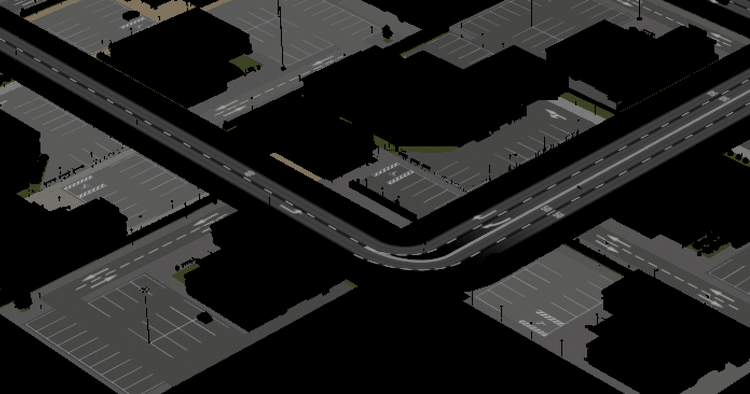

Roads and decals

Now we are finally rendering something recognizable: roads! And lawns, and other things that follow the surface of the terrain.

This pass takes about 1 millisecond.

Main pass

This is the meat (vegan alternatives are available) of the deferred rendering process. This pass takes in all the intermediate render targets produced so far alongside the virtual texture caches and some seemingly hardcoded textures and produces several more buffers, including ones for albedo, normals, different PBR properties and depth. It also produces the virtual texture visibility information I mentioned earlier. It is rendered at half horizontal resolution, presumably as an optimization. Terrain doesn’t use virtual texturing, so it is rendered at full resolution and with a constant color regardless of the actual terrain texture.

This pass takes 16.7 milliseconds, or about as long as the entire frame should take if we were aiming for 60 frames per second. The pass rasterizes all of the geometry again, so the same reasons for the pre-pass being slow apply here as well. The additional cost is probably explained by the number of additional outputs, plus the cost of virtual texture cache lookups and texture mapping itself.

Ambient occlusion

Next the game produces an ambient occlusion buffer using motion vectors, normals and the depth buffer plus copies of the last two from the previous frame. Judging by the debug names of the shaders the algorithm is GTAO. This takes about 1.6 milliseconds.

Cascaded shadow mapping

C:S2 uses cascaded shadow mapping, and in my opinion not very well. Shadows are full of artifacts and constantly flickering especially when either the sun or any foliage are moving (and they are, all of the time). Even when the screen isn’t completely covered with artifacts, the resolution of the shadows is quite low, and the jump in quality between the different shadow cascades is very noticeable.

The game uses four cascades with a resolution of 2048x2048 pixels per cascade. There’s a directional shadow map resolution setting in the advanced graphics settings menu, but at the time of writing it’s not connected to anything in the code; neither the individual setting nor the overall shadow quality setting alters the resolution of the shadow map. This is the reason why the medium and the high shadow setting presets are literally identical. I don’t know whether this is an oversight or if the setting was hastily disabled because it was causing issues. The low preset differs from medium and high in that it disables shadows cast by the terrain.

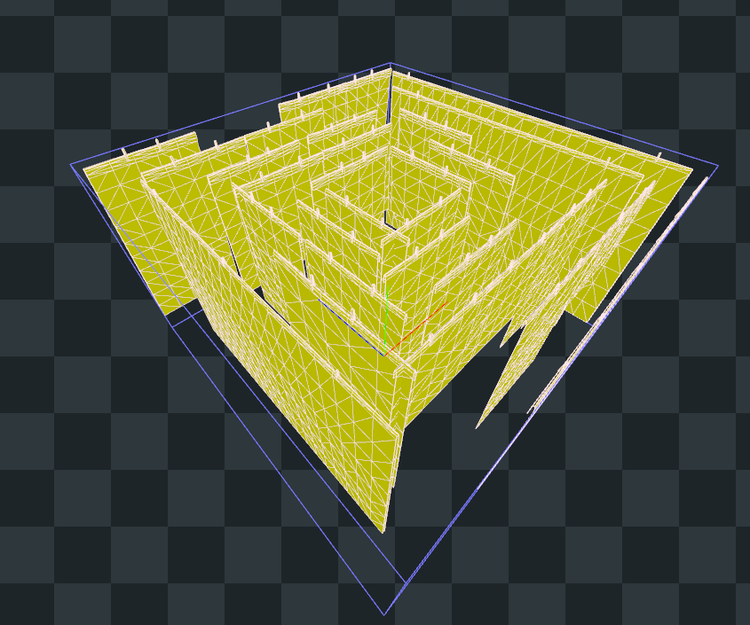

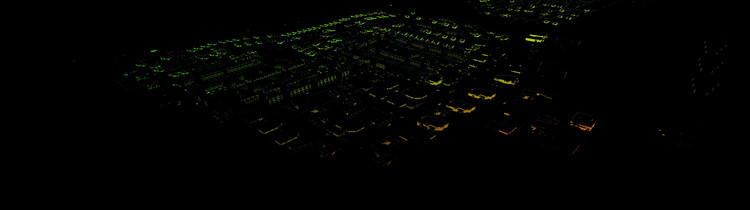

Despite the low quality, it is by far the slowest rendering pass, taking about 40 milliseconds or almost half of total frametime. It also dwarfs all other passes in terms of the number of draw calls: in my test frame 4828 out of 6705 draw calls were for shadow mapping, a staggering 72%. This is why there’s such a huge performance gain when shadows are disabled.

The reasons behind this pass’s slowness are mostly the same as with the pre-pass and the main pass: too much unnecessary geometry rendered with way too many draw calls. Renderdoc’s performance counters view indicates that many of the draw calls affect between zero and under 100 pixels in the shadow map, and the teeth are back again. The game seems to treat every single 3D object as a potential shadow caster on all quality settings regardless of size or distance. There’s a lot of room for optimization here, and in theory general improvements to LODs and culling should have a large impact on shadow mapping performance as well. Hopefully after performance has been improved CO (or modders) can turn the shadow quality setting back up, and raise the shadow map resolution to something more 2023.
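One way to think about which objects deserve a shadow draw call is to estimate how many shadow map texels they could possibly cover. The sketch below does that for a bounding sphere in a single cascade, relying on the fact that a directional light uses an orthographic projection, so the footprint doesn't depend on distance from the light. The 2048 pixel resolution is from the game; the cascade width, the object sizes and the 100 texel threshold are assumptions for illustration only.

```python
import math


def shadow_texels_covered(
    radius_m: float, cascade_width_m: float, resolution_px: int = 2048
) -> float:
    """Approximate texel area of a bounding sphere in an orthographic shadow cascade."""
    texels_per_meter = resolution_px / cascade_width_m
    radius_texels = radius_m * texels_per_meter
    return math.pi * radius_texels * radius_texels


def is_worth_casting(
    radius_m: float,
    cascade_width_m: float,
    min_texels: float = 100.0,  # hypothetical threshold
    resolution_px: int = 2048,
) -> bool:
    """Skip shadow casters whose footprint in the cascade is below a threshold."""
    return shadow_texels_covered(radius_m, cascade_width_m, resolution_px) >= min_texels


if __name__ == "__main__":
    cascade_width = 2048.0  # assumed: a far cascade covering about 2 km
    for name, radius in (("teeth", 0.03), ("character", 1.0), ("building", 10.0)):
        texels = shadow_texels_covered(radius, cascade_width)
        verdict = "draw" if is_worth_casting(radius, cascade_width) else "skip"
        print(f"{name}: about {texels:.3f} texels, {verdict}")
```

A check like this would have to be evaluated per cascade, since the same object can be significant in the nearest cascade and negligible in the farthest one.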

Let’s end this part on a positive side note: when digging into shadow handling code I stumbled upon the fact that the game computes the positions of the sun and the moon using the current date, time and coordinates of the city. That’s a really neat detail!
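For the curious, the general idea of deriving the sun's height above the horizon from date, time and latitude can be sketched with a common textbook approximation. This is not the game's formula, which I haven't reproduced here: the sketch uses a simple cosine model for solar declination, takes local solar time as input, and ignores longitude, the equation of time and atmospheric refraction.

```python
import math


def solar_elevation_deg(day_of_year: int, solar_hour: float, latitude_deg: float) -> float:
    """Approximate sun elevation in degrees; negative values mean below the horizon."""
    # Simple declination model: about -23.44 degrees around the December solstice.
    declination = math.radians(-23.44) * math.cos(
        2.0 * math.pi / 365.0 * (day_of_year + 10)
    )
    # The sun moves 15 degrees per hour relative to local solar noon.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    latitude = math.radians(latitude_deg)
    sin_elevation = math.sin(latitude) * math.sin(declination) + math.cos(
        latitude
    ) * math.cos(declination) * math.cos(hour_angle)
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elevation))))


if __name__ == "__main__":
    # Day 172 is around the June solstice; 60.17 is roughly the latitude of Helsinki.
    for hour in (0.0, 6.0, 12.0, 18.0):
        elevation = solar_elevation_deg(172, hour, 60.17)
        print(f"{hour:04.1f} h solar time: {elevation:.1f} degrees")
```

The resulting elevation, together with an azimuth computed in a similar fashion, would be enough to orient a directional light for shadow mapping.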

Screen space reflections and global illumination

The game uses Unity HDRP’s built-in implementations of screen space reflections (SSR) and screen space global illumination (SSGI). I won’t be covering them in detail because Unity’s documentation is already decently comprehensive, plus I’m not going to pretend I fully understand them. Global illumination uses ray-marching and is evaluated by default at half resolution. Denoising and temporal accumulation are used to improve the quality. It would be nice if the game as a self-proclaimed next generation city builder supported hardware accelerated ray tracing in addition to these screen space solutions, but I’m not holding my breath.

These two effects combined took about 3 milliseconds.

Deferred lighting

This is where it all comes together. Most of the intermediate buffers produced so far are combined to render the near-final image. Not much more to say about this pass, except that it takes about 2.1 milliseconds.

Weird clothing pass

There’s a small rendering pass just for the clothes of the Didimo characters, in this case 3 dresses, 1 jumpsuit and 1 set of suit trousers. The remaining 8 characters are either naked or their clothes use different shaders. This pass affects almost no pixels at this zoom level. Luckily it takes just 0.2 milliseconds.

Sky rendering

The sky is rendered next using the previously generated skybox texture, though it is not visible in my example frame. This pass takes about 0.3 milliseconds.

Transparent objects pre-pass

Traditional deferred rendering doesn’t work with transparent objects, so they are rendered separately. Transparent objects are rendered in two phases, starting with this pre-pass which only updates the normal and depth buffers. There are not many unique transparent objects in the frame, so this pass takes about 0.12 milliseconds.

Water rendering

The game does some pre-processing in compute shaders to prepare for water rendering and then produces several downscaled and blurred versions of the almost-final image. These inputs are fed to the main water rendering shader which renders the water surface. This takes about 1 millisecond.

Particles, rain and transparent objects

This pass handles most things transparent, including particles, weather effects and 3D objects made of glass and other transparent materials. No particles are visible in the frame, but the game still tries to render the smoke from the industrial zone’s chimneys, as well as the stream of goop produced by the sewage pipe. Rain is rendered next, using 20 instances of 12K vertices each. Interestingly the remaining transparent objects are rendered after the rain, causing some weirdness when transparent objects (like greenhouses and power lines) and rain overlap. All of this takes about 0.56 milliseconds.

VT feedback processing

The virtual texture visibility buffer we got earlier is processed with a compute shader, resulting in an output texture 1/16th of the original resolution. For the visualization I nearest neighbor scaled the output 8x to make it more readable. This is the information the game ultimately gets back from the GPU to decide which texture tiles to load and unload. Renderdoc reported very little time spent on this, well under 0.1 milliseconds.
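Conceptually the feedback step boils down to "shrink the buffer, then list the tiles that are still in it". The Python sketch below shows that idea on the CPU. It is a guess at the general technique rather than the game's compute shader: I'm assuming that 1/16th means one sample per 4x4 block of pixels, that the top-left sample of each block is kept, and the tile IDs are invented.

```python
def downsample_feedback(feedback, block=4):
    """Keep one tile ID per block x block group of pixels.

    `feedback` is a 2D list where each entry is the ID of the texture tile
    visible at that pixel, or None where no virtual texture was sampled.
    Picking the top-left sample is an assumption made for simplicity.
    """
    return [
        [feedback[y][x] for x in range(0, len(feedback[0]), block)]
        for y in range(0, len(feedback), block)
    ]


def requested_tiles(reduced):
    """Unique tile IDs the CPU side would want resident in the cache."""
    return {tile for row in reduced for tile in row if tile is not None}


# Hypothetical 8x8 visibility buffer: left half shows tile 7, right half tile 9,
# with one stray pixel of tile 3 that the reduction happens to skip.
feedback = [[7] * 4 + [9] * 4 for _ in range(8)]
feedback[1][1] = 3
feedback[5][6] = None

reduced = downsample_feedback(feedback)
print(len(reduced), "x", len(reduced[0]))
print(sorted(requested_tiles(reduced)))
```

The stray tile 3 pixel shows the trade-off: a heavily reduced buffer is cheap to read back, but a tile that only covers a pixel or two can be missed for a frame.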

Bunch of post-processing

The game uses many of Unity’s built-in post-processing effects, including temporal AA (which is a bit broken as I previously mentioned), bloom and tonemapping, plus DOF and motion blur if enabled. I can’t be bothered to sum up the timings of all of these, but it’s about 1 to 2 milliseconds in total.

Outlines, text and other UI

The last remaining draw calls are used to render all of the different UI elements, both the ones that are drawn into the world and the more traditional UI elements like the bottom bar and other controls. Quite a lot of draw calls are used for the Gameface-powered UI elements, though ultimately these calls are very fast compared to the rest of the rendering process. The names of roads are rendered into the scene using 2D signed distance fields. The depth buffer is used to blend the text with the scene if the text is behind a building or other object, which is a nice touch. This final pass takes an irrelevant amount of time.
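For those unfamiliar with signed distance field text, the core of it fits in a few lines. The Python sketch below mimics what a pixel shader would do per pixel. It is a generic illustration, not the game's shader: the 0.5-on-the-outline convention, the depth convention (smaller means closer) and the `occluded_opacity` constant are all assumptions of mine.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Hermite interpolation between 0 and 1, as in GLSL/HLSL smoothstep."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)


def glyph_alpha(distance: float, softness: float = 0.05) -> float:
    """Coverage of a text pixel from a sampled signed distance.

    Convention assumed here: the texture stores 0.5 exactly on the glyph
    outline, larger values inside the glyph and smaller values outside.
    """
    return smoothstep(0.5 - softness, 0.5 + softness, distance)


def text_alpha(distance: float, text_depth: float, scene_depth: float,
               occluded_opacity: float = 0.3) -> float:
    """Fade the label instead of hiding it when scene geometry is in front.

    Depth convention assumed here: smaller depth is closer to the camera.
    occluded_opacity is a made-up constant.
    """
    alpha = glyph_alpha(distance)
    if scene_depth < text_depth:  # something in the scene covers the text
        alpha *= occluded_opacity
    return alpha


# A pixel well inside a glyph, first unobstructed, then behind a building.
print(text_alpha(0.7, text_depth=5.0, scene_depth=10.0))
print(text_alpha(0.7, text_depth=10.0, scene_depth=5.0))
```

Because the glyph edge is reconstructed from distances rather than stored as pixels, the same small texture stays sharp at any zoom level, which suits road names that get viewed from wildly different distances.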

And we are done!

I tried not to make this into an in-depth graphics study, but I think I failed. Hope you learned something new.

Summary and conclusions

So why is Cities: Skylines 2 so incredibly heavy on the GPU? The short answer is that the game is throwing so much unnecessary geometry at the graphics card that it manages to be largely limited by the available rasterization performance. The unnecessary geometry is caused both by the lack of simplified LOD variants for many of the game’s meshes and by the simplistic and seemingly untuned culling implementation. The game has its own culling implementation instead of using Unity’s built-in solution (which should at least in theory be much more advanced) because Colossal Order had to implement quite a lot of the graphics side themselves: Unity’s integration between DOTS and HDRP is still very much a work in progress and arguably unsuitable for most actual games. Similarly, Unity’s virtual texturing solution remains eternally in beta, so CO had to implement their own solution for that too, which still has some teething issues.
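To make the LOD and culling point a bit more concrete, here is a minimal Python sketch of the usual approach: estimate how tall an object is on screen and pick a simpler mesh (or nothing at all) as it shrinks. This is a generic textbook version, not Unity's or Colossal Order's implementation, and the function names, pixel thresholds, field of view and object sizes are all made up.

```python
import math


def projected_height_px(radius_m, distance_m, fov_y_deg, screen_height_px):
    """Approximate on-screen height of a bounding sphere under perspective."""
    if distance_m <= radius_m:
        return float(screen_height_px)  # camera is inside or touching the sphere
    # Height of the view frustum at this distance, in world units.
    frustum_height = 2.0 * distance_m * math.tan(math.radians(fov_y_deg) / 2.0)
    return (2.0 * radius_m / frustum_height) * screen_height_px


def select_lod(size_px, thresholds_px=(200.0, 50.0, 4.0)):
    """Return a LOD index, or None when the object is too small to draw.

    thresholds_px are made-up cut-offs: LOD0 above 200 px, LOD1 above 50 px,
    LOD2 above 4 px, culled below that.
    """
    for lod, threshold in enumerate(thresholds_px):
        if size_px >= threshold:
            return lod
    return None


# A hypothetical prop with a 0.5 m bounding radius at increasing distances,
# seen through a 60 degree vertical field of view on a 1080 px tall screen.
for distance in (2.0, 5.0, 20.0, 400.0):
    size = projected_height_px(0.5, distance, 60.0, 1080)
    print(distance, round(size, 1), select_lod(size))
```

With these numbers the prop drops from the full mesh to the simplest LOD within 20 meters and is skipped entirely at city-overview distances, where it would cover only a couple of pixels.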

Here’s what I think happened (a.k.a. this is speculation): Colossal Order took a gamble on Unity’s new and shiny tech, and in some ways it paid off massively and in others it caused them a lot of headaches. This is not a rare situation in software development and is something I’ve experienced myself as well in my day job as a web-leaning developer. They chose DOTS as the architecture to fix the CPU bottlenecks their previous game suffered from and to increase the scale & depth of the simulation, and largely succeeded on that front. CO started the game when DOTS was still experimental, and it probably came as a surprise how much they had to implement themselves even when DOTS was officially considered production ready. I wouldn’t be surprised if they started the game with Entities Graphics but then had to pivot to custom solutions for culling, skeletal animation, texture streaming and so on when they realized Unity’s official solution was not going to cut it. Ultimately the game had to be released too early when these systems were still unpolished, likely due to financial and / or publisher pressure. None of these technical issues were news for the developers on release day, and I don’t believe their claim that the game was intended to target 30 FPS from the beginning: no purebred PC game has done that since the early 2000s, and the graphical fidelity doesn’t justify it.

While I did find a lot to complain about in the game’s technology, this little investigation, which has been consuming a large share of my free time for the past 1.5 weeks, has also made me appreciate the game’s lofty goals and sympathize more with the developers of this technically ambitious yet troubled game. I’ve learned a lot about how Cities: Skylines 2 & Unity HDRP work under the hood, and I’ve also gotten some good practice with Renderdoc.

If you liked this article, good for you! I don’t have anything to sell you. Write a comment or something to the media aggregator or social media of your choice. Subscribe to my Atom feed if it still works. Stay tuned for my next article in a couple of years.

Added on 2023-11-06: FAQ and other notes

Wow this blew up, thanks for the gold kind stranger and all that. I’ve seen a lot of comments and questions about this article and I’ve tried to answer most of them, but they’ve been getting kind of repetitive so I figured I’d add a FAQ section here to clarify and expand on some things.

You mentioned you got 7 FPS in the main menu. Why was it so slow?

Even though the laggy menu was what motivated me to start investigating the game’s performance, I ultimately wasn’t able to figure out what was causing it. Well, not fully. Even before opening Renderdoc for the first time I noticed that if you exit the game from the main menu, you can see a brief glimpse of sky and water. Renderdoc confirmed my suspicions: even in the main menu there’s always a 3D scene, with terrain, water and a skybox. The scene is rendered to relative completion, but then it gets fully covered by the user interface. The full rendering pipeline is used even for this invisible scene, which explains why graphics settings have an instant effect on performance even in the main menu. Combine this with the fact that at least on launch the game maxed most if not all settings by default, including the very heavy effects the developers advised against using, and you get a recipe for a slideshow.

However, that alone doesn’t explain why the framerate was in the single digits, and the boring answer is that I was never able to replicate this level of performance again. It wasn’t just me though; most if not all of my friends who played the game on release experienced the same thing on the first launch. The game does a virtual texture cache processing thing on the first start and presumably when assets have changed, but I haven’t checked if it even uses the GPU for that. So this will remain a mystery for now.

Quick facts about the main menu scene (including both the background and the menu on top):

- About 400 draw calls

- 563k input vertices

- 745k rasterized triangles

How many total vertices and triangles are there in the scene?

According to Renderdoc’s performance counters rendering the frame involved 121 million input vertices and about 36 million rasterized triangles. This is not the total number of polygons that are visible on the screen, but instead the amount of geometry that is processed across all render passes. This is frankly an insane amount of geometry for a game like this, and it’s no wonder the game is struggling to keep up. I’ve seen some reports on Reddit that the game can reach hundreds of millions of vertices in larger cities, even up to a billion per frame in certain situations.

Do you think the game should have been made with Unreal Engine 5 or some other engine instead?

Boring consultant answer: it depends. In an ideal world where budgets and deadlines don’t exist, Cities: Skylines 2 should have probably been made with a fully custom engine (or at least fully custom renderer), because neither of the big two engines is really designed for a game like this. Unreal Engine 5 does include solutions for many of the issues C:S2 is currently suffering from; there’s Nanite for LODs and Lumen & Virtual Shadow Maps for lighting and shadows. However UE5 has its own share of limitations: it doesn’t offer anything like Unity ECS for gameplay logic and large scale simulations (except for Mass, which is probably far from production ready), and as an engine where the primary programming language is C++ it’s far less flexible and accessible in terms of modding, which was a big part of the original game’s success. Both issues are fixable with enough time and money, but we should remember that CO is still a relatively small company and they have to pick their battles.

Renderdoc is unreliable for benchmarking, you should have used [insert other tool here] instead.

I probably should have, if I had gotten the alternatives to work. I learned from a helpful comment that apparently Nvidia in their infinite wisdom dropped D3D11 profiling support from Nsight (™️) some time ago, so if I wanted to profile rendering performance properly and get better insights the solution would be to downgrade the software to an older version.

Ultimately the goal wasn’t really to benchmark the performance, but instead to focus on the big picture and to find an answer to the question “what is the game doing that makes it so slow?”. I believe I’ve found enough evidence to answer that question, even if individual timings might be off by some margin.

something something can’t believe this game has no LODs anywhere

That’s not a question.

To be clear, the game does have LODs for some / many of the objects. Overall I’d say that most if not all buildings have proper LODs, but many decorations and props like pipes and yard props tend not to have any. Furthermore I don’t even know if the issue is that these props literally don’t have any LODs, or if the LODs are just never picked for some reason. Maybe they have autogenerated LODs but the results were so bad that they were disabled? No idea.

You mentioned the game includes InstaLOD. What is its role in the game?

Decompiled code indicates that InstaLOD is included for the game’s asset pipeline (basically for mod tools), and it doesn’t seem to be used outside of that. So InstaLOD is only used when importing new assets into the game.

I hate JavaScript. Hating JavaScript and / or the modern web is one of my most defining personality traits. The game uses JavaScript for the UI, which is probably why it’s so slow and ugly.

Not a question again, and not really related to the topic at hand. The data I’ve seen indicates that the UI is not a significant bottleneck, and won’t be any time soon. I have zero personal experience with Gameface, but it seems like a popular choice for big budget games these days, including some games that are widely considered to be well optimized. The most important thing to know about it is that it’s not based on a full browser engine like Electron is, but instead it’s a fully custom user interface framework that implements a subset of modern web technologies specifically for game UI use cases. That should mean significantly smaller memory footprint and better performance than something based on Chromium / Blink or WebKit.

How many questions can you come up with? Don’t you have anything better to do?

Indeed I do. That was the last one.